Evaluation-Driven Development (EDD) lets teams measure real progress, validate outcomes, and de-risk decisions across the entire AI development lifecycle. It gives them the quality, consistency, accuracy, and reliability needed to put AI products into production.

Our methodology is built on the principle that understanding how to measure success is more important than building the system itself. By defining evaluation criteria upfront, we ensure that every AI system we build meets real user needs and business objectives.
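As an illustration of what defining evaluation criteria upfront can look like, here is a minimal Python sketch. The `Criterion` class, the `exact_match_rate` metric, and the 0.75 threshold are hypothetical names and values chosen for this example, not part of our internal tooling. The point is that the target is written down before any system output is scored.

```python
from dataclasses import dataclass
from typing import Callable

# A (expected, actual) pair for one evaluated example.
Pair = tuple[str, str]


@dataclass(frozen=True)
class Criterion:
    """One success criterion, agreed before development starts (hypothetical)."""
    name: str
    metric: Callable[[list[Pair]], float]
    threshold: float  # minimum acceptable score


def exact_match_rate(pairs: list[Pair]) -> float:
    """Share of outputs that match the expected answer, ignoring case and outer spaces."""
    if not pairs:
        return 0.0
    hits = sum(1 for expected, actual in pairs
               if expected.strip().lower() == actual.strip().lower())
    return hits / len(pairs)


def evaluate(criteria: list[Criterion], pairs: list[Pair]) -> dict[str, tuple[float, bool]]:
    """Score every criterion and record whether it meets its threshold."""
    results = {}
    for criterion in criteria:
        score = criterion.metric(pairs)
        results[criterion.name] = (score, score >= criterion.threshold)
    return results


# Criteria are declared first; outputs are collected and scored afterwards.
CRITERIA = [Criterion("exact_match", exact_match_rate, threshold=0.75)]

pairs = [("Paris", "paris"), ("4", "4"), ("blue", "green"), ("yes", "Yes ")]
report = evaluate(CRITERIA, pairs)
```

In practice the metric would be whatever fits the product, such as a rubric score, a retrieval hit rate, or a task completion check. The structure stays the same: a named criterion, a measurable score, and a threshold agreed in advance.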

Our Evaluation-Driven Development approach combines deep technical expertise, structured processes, and powerful internal tools to continuously measure, improve, and align AI performance with real-world goals.

Whether you’re deploying LLM-based assistants, retrieval-augmented search, classification models, or multi-agent workflows, our offering ensures your AI doesn’t just function, it progresses.

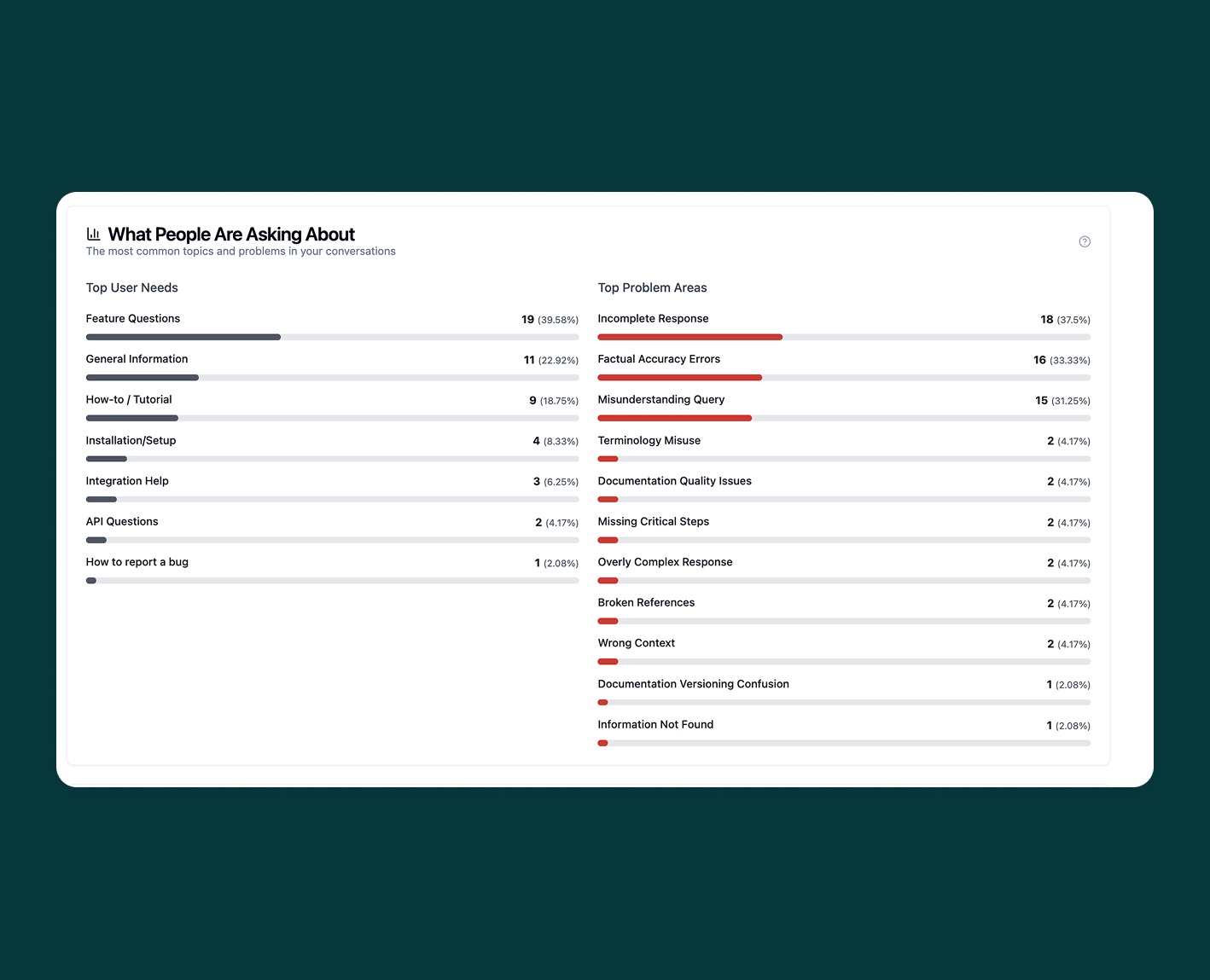

We define success early across business, user, and technical goals. Then we continuously analyse system outputs and user interactions to identify what’s working, what’s failing, and what’s missing.

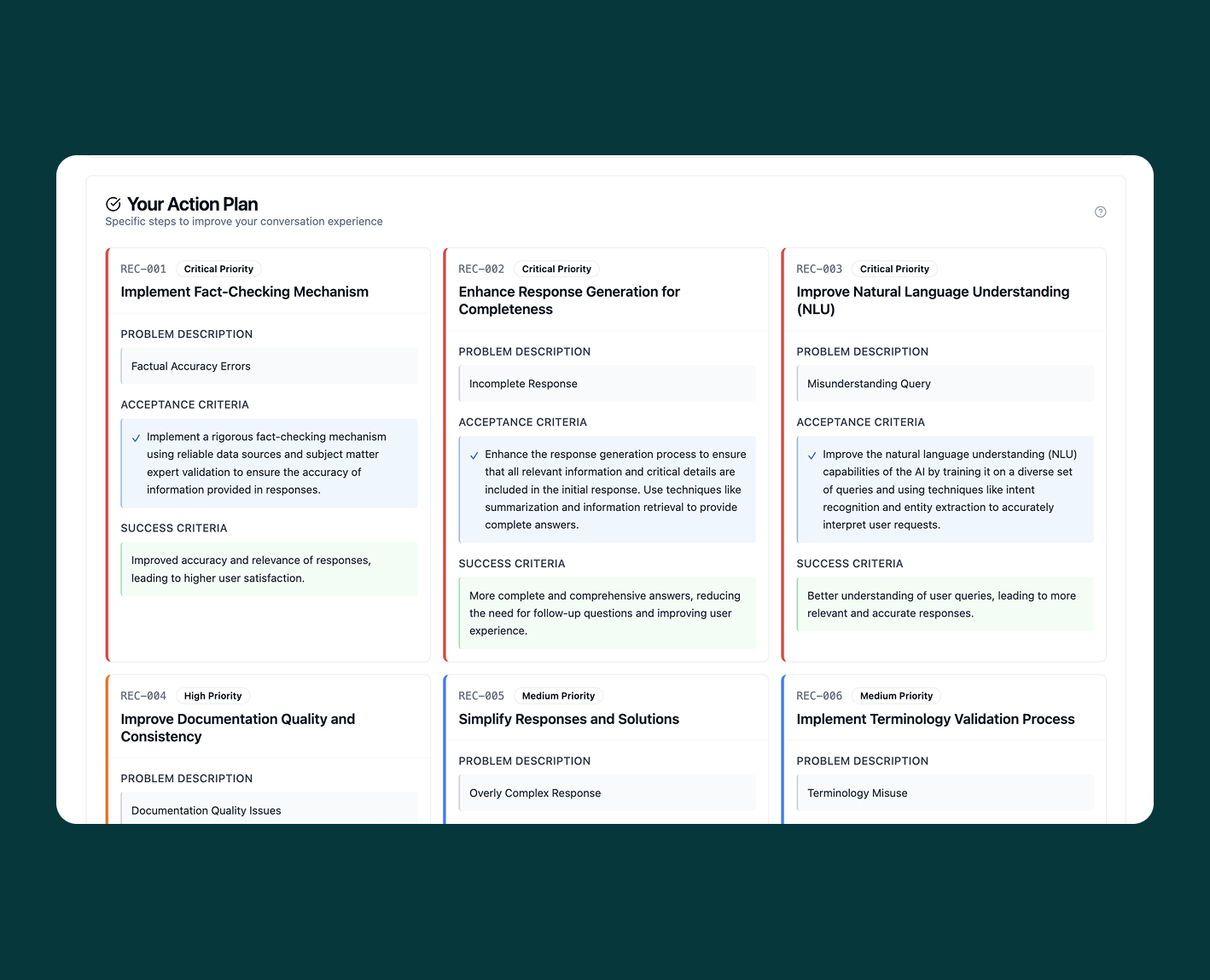

Every signal, whether feedback, logs, outputs, edge cases, or user behaviour, is treated as a learning opportunity. We turn these into structured insights and prioritised plans for improvement.

We define success upfront across business, user, and technical dimensions. Then we continuously analyse output patterns, system behaviour, and user interactions, structured or unstructured, textual or task-based, to surface what’s working, what’s breaking, and what’s missing.

Whether it’s feedback, logs, system outputs, edge cases, or user behaviour, every signal is a learning opportunity. We capture, interpret, and structure these signals into actionable insights and prioritised improvement plans.

We empower your teams to generate better training data, evaluate outputs at scale, and maintain control over evolving systems. Whether you're refining prompts or validating multi-agent behaviours, we enable structured human feedback and collaborative quality control.

We implement Evaluation-Driven Development through a structured, phased framework designed to reduce risk, accelerate learning, and deliver lasting impact. Here's how we turn strategy into execution:

We start with clarity. Together, we assess your current state, define what success looks like, and align all stakeholders around shared goals and expectations.

We build the foundation for continuous evaluation. This ensures that everything we develop can be measured, monitored, and improved right from the start.

We move fast but with constant feedback. Development is driven by data, validated through evaluation, and continuously refined to improve performance and reliability.

Once in production, the learning doesn’t stop. We keep the system healthy, aligned, and improving by tracking live performance and closing the loop with user feedback.
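Tracking live performance can be as simple as watching a pass rate over the most recent interactions. The sketch below is one possible shape for this, not a description of our production tooling. The `RollingPassRate` class, the window size, and the alert threshold are all assumptions made for the example.

```python
from collections import deque


class RollingPassRate:
    """Pass rate over the last `window` evaluated interactions (hypothetical helper)."""

    def __init__(self, window: int, alert_below: float) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        # deque with maxlen drops the oldest outcome automatically.
        self._outcomes: deque[bool] = deque(maxlen=window)
        self.alert_below = alert_below

    def record(self, passed: bool) -> None:
        """Store the outcome of one evaluated interaction."""
        self._outcomes.append(passed)

    @property
    def rate(self) -> float:
        """Current pass rate; 1.0 while no outcomes have been recorded."""
        if not self._outcomes:
            return 1.0
        return sum(self._outcomes) / len(self._outcomes)

    def needs_attention(self) -> bool:
        """True once the window is full and the pass rate is below the alert level."""
        window_full = len(self._outcomes) == self._outcomes.maxlen
        return window_full and self.rate < self.alert_below


# Example: outcomes could come from automated checks or user feedback.
monitor = RollingPassRate(window=4, alert_below=0.75)
for outcome in (True, True, False, False):
    monitor.record(outcome)
```

Waiting for a full window before alerting avoids reacting to the first one or two failures after a deployment. A flagged window is then a prompt to review the failing interactions and feed them back into the evaluation set.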
