A large international machine manufacturer operates 200+ customer sites with roughly 5,800 machines in total. Each device captures sensor readings every second, adding up to billions of sensor events every month.

Alongside machine manufacturing, the company offers an ongoing lifecycle support service designed to help customers maximise output, ensure business continuity, and run at peak efficiency. But delivering this service required deep, data-driven insights, and the data wasn’t easily accessible.

- Sensor data was stored in base64-encoded, zipped JSON formats, making it extremely hard to process.

- Historical data spanned over 5 years of files sitting in Azure Blob Storage.

- Querying or comparing performance across machines required manual, time-consuming preprocessing.

- A traditional database approach would have been prohibitively expensive (>€1,000/month) at this scale.

The goal of the project was to build a data backbone that the service teams and analysts could easily retrieve actionable insights from. The manufacturer needed a system that could handle both years of historical backlog and daily incoming data, while keeping costs predictable and enabling fast access to insights for lifecycle support services.

The Solution: A Serverless, Query-Ready Data Architecture

To achieve our client’s goal, we designed and implemented a fully automated, serverless ETL pipeline on Azure, optimised for incremental processing and cost efficiency.

The solution has the following core components:

- Prepare data for efficient filtering

The source data arrived as base64-encoded, zipped nested JSON files. We converted this into Parquet, a standardised columnar format that is both compact and optimised for analytics. This transformation made the data easy to query and much faster to process. - Structuring data for efficient filtering

We organised the processed files in Azure Blob Storage with a clear hierarchy of site, room, machine, year, month, and day. This structure allows queries to filter by these parameters before opening any file, which drastically reduces the amount of data scanned and improves performance. - Enabling direct queries without ingestion

Instead of ingesting terabytes of data into a database, we connected Azure Data Explorer directly to Blob Storage. This approach avoids the cost and overhead of database ingestion while still enabling sub-second query times, even across millions of files. - Automating daily parallel processing

The pipeline is triggered automatically every night at midnight to pick up the previous day’s new data. Each site is processed independently in parallel using containerised jobs, ensuring the system scales seamlessly across more than 200 sites and over 5,800 machines.

Cost Efficiency at Scale

From day one, the architecture was built with cost optimisation as a core design goal, without sacrificing performance.

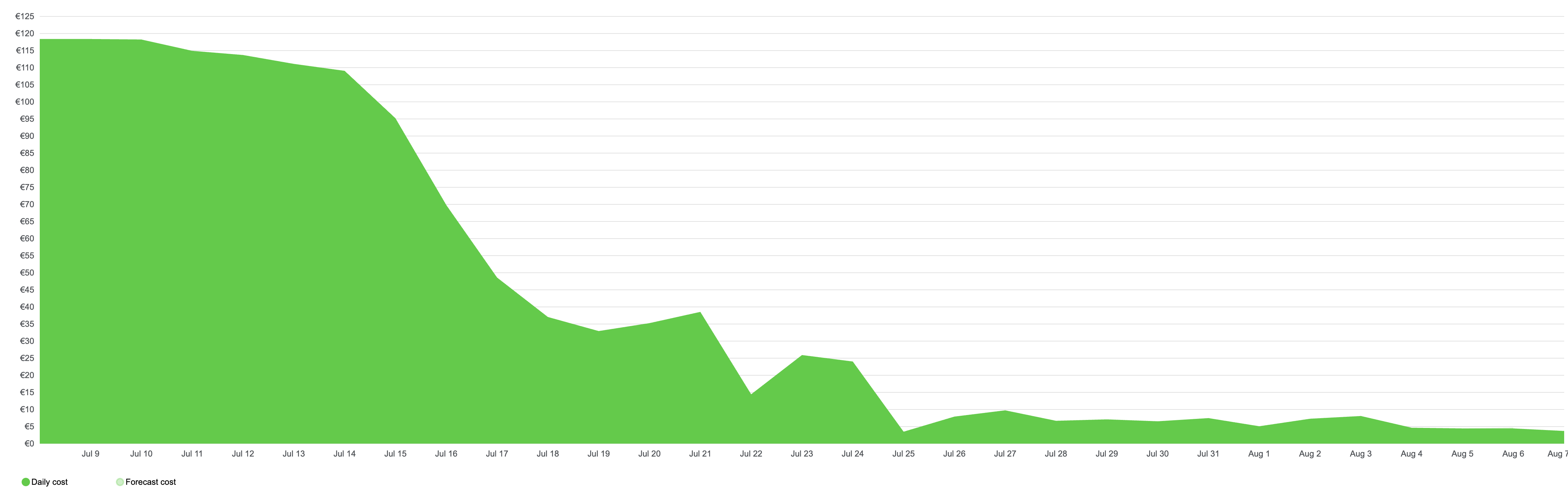

After the initial heavy lifting of processing five years of historical sensor data, a job that ran for about ten days and cost a few thousand euros, the system seamlessly transitioned into its steady operating rhythm. From that point onward, the daily cost of operating dropped to just €6–8 for more than 200 sites and 5,800 machines due to our efficient serverless architecture. Despite the scale of over 3 million files and 244 gigabytes of data, targeted queries still return results in less than a second.

By avoiding traditional database ingestion and server infrastructure, the architecture delivers the highest level of performance while saving more than €1,000 per month in operational costs.

The Outcome

The new architecture unlocked a data foundation that powers the manufacturer’s lifecycle support service:

- Service teams can query years of machine performance in less than a second.

- Customers receive actionable insights on efficiency, maintenance, and output improvements.

- The solution scales globally with minimal operational overhead.

Massive IoT data doesn’t require heavyweight infrastructure. With the right design, you can achieve sub-second query performance over hundreds of gigabytes, while keeping costs at just a few euros a day.