When GPT-5 was announced, it was billed as the closest step yet toward AGI; the pinnacle of model development, arriving after months of anticipation. Marketing materials, demos, and second-hand commentary flooded in, bolstered by impressive results on popular maths and coding benchmarks.

These benchmarks may look convincing, but they measure how a model solves clean, well-defined problems with clear right-or-wrong answers, conditions that rarely exist in the real world. In business environments, queries are messy, data is fragmented, and context is everything.

For an enterprise, it doesn’t matter how a model scores on a public leaderboard. What matters is how it performs in your architecture, with your processes, on your data. That’s the only benchmark that matters.

That is why Evaluation-Driven Development (EDD) is essential. EDD ensures that you build your AI system on a foundation full of observability agents, automated evaluation tools, and feedback loops, which enables you to benchmark AI models under production-like conditions. It gives you empirical, context-specific results, showing whether a new model actually moves the baseline or just looks good on paper.

At Faktion, we have multiple agentic & knowledge assistants in production across various industries, built upon our own proprietary Evaluation Driven Development (EDD). As a result, we could immediately benchmark GPT-5 across these knowledge assistants to evaluate if it really moves the baseline in real-world use cases; instead of taking GPT-5’s public performance at face value.

The Test Case

Our research question was simple but critical:

Does GPT-5 meaningfully outperform previous-generation models in real-world enterprise use cases, and if not, which combinations of model and agent architecture actually deliver the best results?

This matters because model choice is rarely made in isolation in enterprise AI. A model’s output is shaped by the agent architecture around it; the orchestration layer that decides how queries are processed, how the model is called, and how answers are synthesised. A model that excels in one architecture may underperform in another.

We tested this in the context of a multi-agent knowledge assistant for a large enterprise, where users ask complex, domain-specific questions and the system must interpret intent and deliver complete, context-aware answers. A misinterpretation can cause delays, misinformation, or costly mistakes, which is why we measured performance using the Query Understanding Score. This metric assesses how well a system grasps user intent and returns a fully relevant, accurate answer.

Methodology

- Dataset: A curated dataset with real-world queries sourced from production data.

- Models tested

- GPT-5 (Standard, Mini)

- GPT-4.1 (Standard, Mini, Nano)

- GPT-4o Mini

- Scope: Query Understanding Score, isolating pure model comprehension without the influence of retrieval quality. Context retrieval was deliberately excluded from this study, as retrieval quality is a separate variable we evaluate independently.

Why two architectures?

We wanted to determine not just which model performs best, but which performs best for a given orchestration style:

- Basic Agent: Single-pass design. Minimal reasoning, retrieves and answers in one go. Optimised for speed.

- Research Agent: Multi-step reasoning. Breaks queries into sub-questions, runs them in parallel, then synthesises results. More methodical but more dependent on the model’s reasoning style.

By running each model under both architectures, we could see how model behaviour interacts with agent design and whether a smaller, cheaper model could outperform a larger one when paired correctly.

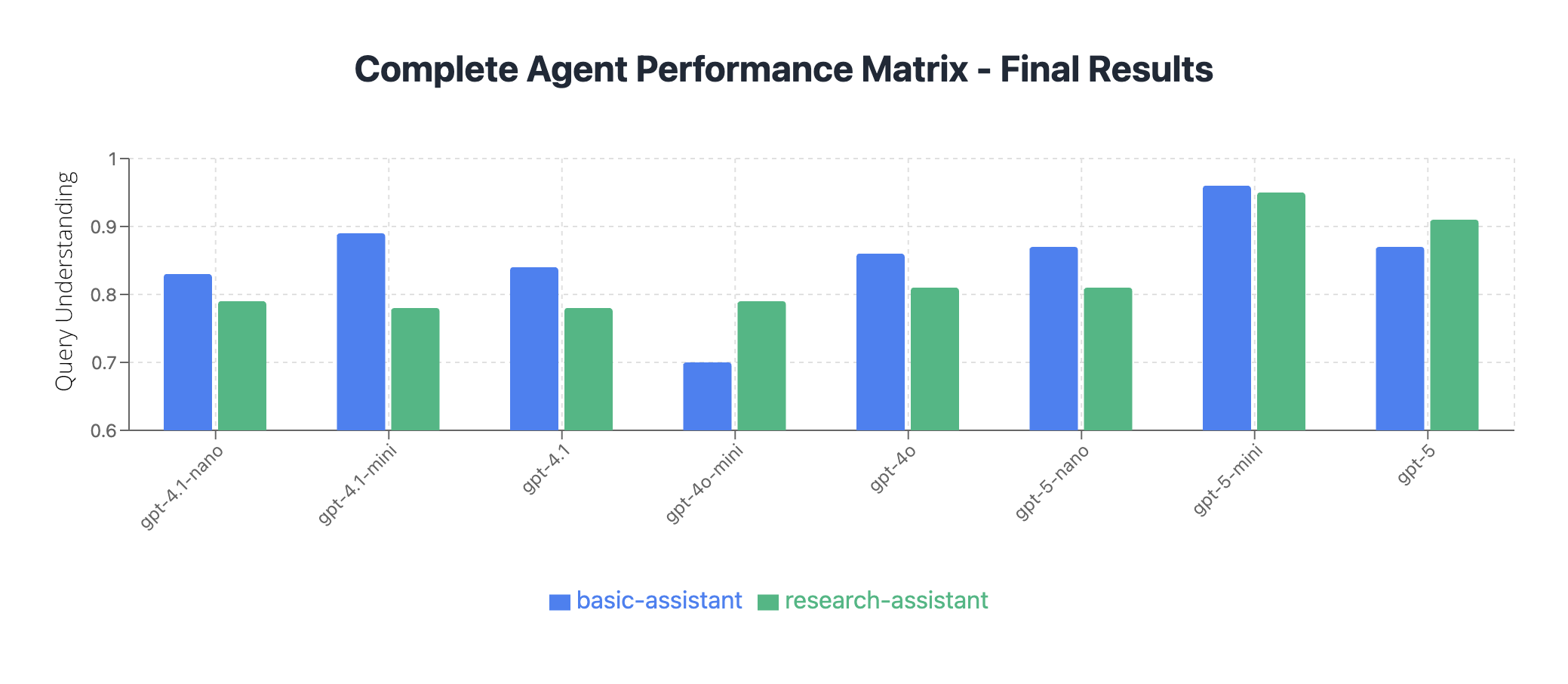

The Results: Agent Architecture is more important than model size

The numbers tell an important story. When we look at best performance for each pairing, certain combinations absolutely shine. GPT-5 Mini with the Basic Agent, for example, hits the highest score of all tests.

Performance is highly dependent on the compatibility between the model and the agent, not on raw compute power or model size.

Top Performers:

- GPT-5 Mini + Basic Agent → 0.96 (highest score overall)

- GPT-5 + research-assistant → 0.91 (research agent champion)

- GPT-4o + basic-assistant → 0.86 (solid speed-quality option)

Ones to Avoid:

- GPT-4o Mini + Basic Agent → 0.67 (major compatibility issues)

Agent-Specific Patterns:

- Basic Agent: More volatile (0.67–0.96) but capable of higher peaks when paired well.

- Research Agent: More consistent (0.78–0.95) but slightly lower peaks.

Note that the results are only specific to this use case and data, and it doesn’t mean you would get similar results for another use case.

Key Learnings

- Model size does not predict performance

Smaller models can outperform larger ones. GPT-5 Mini scored 0.96 with Basic Agent, beating all larger models tested. - Performance is about architectural harmony

Our findings show that enterprise AI success comes from model–architecture synergy, not just raw compute power. The agent’s design has a stronger impact on performance than the model’s size. Basic Agent shows very volatile results, while Research Agent is more robust and steadier and shows more consistent results. The right architecture can elevate a smaller model above a larger one. - Bigger isn’t always better

Larger models can introduce complexity that some agent designs can’t fully leverage. Well-defined architectures can make smaller, more predictable models just as, if not more effective. - Design and test the “brain” and “workflow” together

The AI model is the brain; the agent is the workflow. They should be optimised as a unit. That means:- Testing multiple model sizes in the same workflow.

- Adjusting workflows to exploit model strengths.

- Measuring performance in your production environment.

- Speed–accuracy trade-offs are architecture-dependent

- For latency-sensitive tasks, GPT-4.1-nano in Basic Agent delivered a solid 0.83 score at just 13.41s per query.

- For maximum accuracy regardless of speed, GPT-5 Mini leads in both architectures.

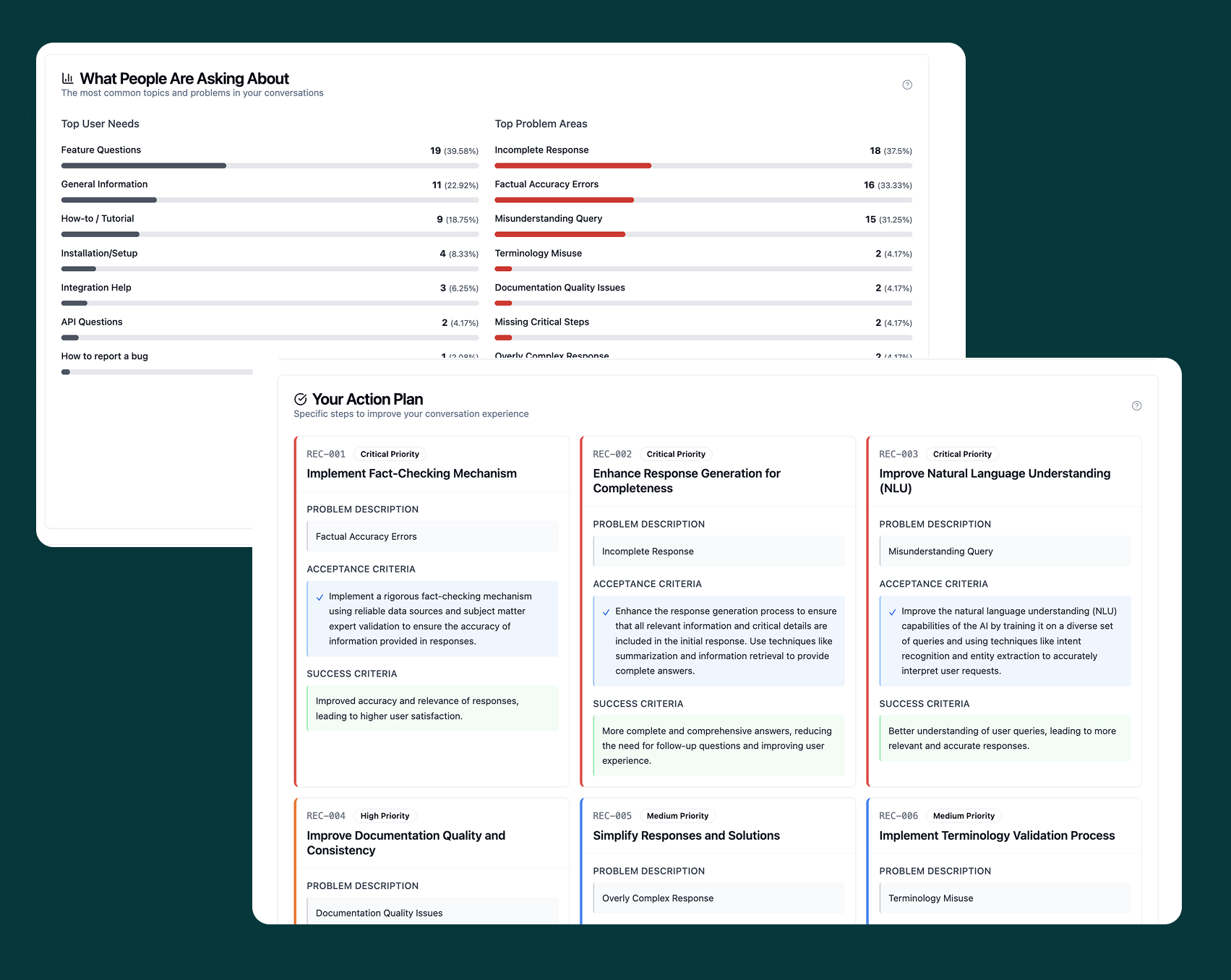

- Evaluation-Driven Development is essential

Without continuous evaluation, you’re guessing which combination works best. With it, you can:- Identify the optimal model–agent pairing.

- Avoid overspending on underperforming models.

- Continuously monitor and improve each system component.

- Cost savings without performance loss

Top performance doesn’t require the largest or most expensive models; optimally paired smaller models can deliver equal or better results. - The best approach is modular: route most sub-tasks to smaller models, reserving the larger models for rare, open-ended reasoning, scaling out with small experts, not up with one monolith.

Closing Thoughts

Chasing the biggest, newest model does not define success in enterprise AI. Adopting an Evaluation-Driven Development improves your chances of success.

Why? Because it gives you the ability to match the right model to your context, your processes, your architecture, and your data and to iterate fast with confidence

Without it, you’re flying blind; unable to see how each model performs in your context, which components help or hurt, how quality is evolving, or where cost savings are possible.

This is why many companies fail to productise their AI prototypes; they think they can just plug in the latest model and they will achieve a production-ready system. Our results show that “bigger” and “newer” don’t necessarily mean “better.”

An Evaluation-Driven Development approach changes that. It embeds measurement into every element of your AI system so you can test multiple models and architectures on your own data and understand which combination delivers the best results for your processes and KPIs. This level of observability enables you to measure real progress from day one and turn every signal - feedback, logs, system outputs, edge cases, user behaviour, and more - into actionable insights that directly inform and prioritise improvement plans.

In enterprise AI, success comes from knowing, not guessing, what works. And you only know if you evaluate. If you want to see how EDD can help you cut through the hype and build AI systems that actually perform in production, explore our Evaluation-Driven Development approach.

.png)